The higher your website ranks, the more traffic it receives, and the better your chances of converting leads. Among the JavaScript libraries that can power an SEO-friendly website, React JS belongs near the top of the list. Today we'll clear up a misconception held by many developers and clients: that React is not a suitable JavaScript library for SEO.

In this article, we cover the key reasons to choose React JS, the challenges of making a React website SEO-friendly, and the best practices for solving them.

Before diving into React JS, let's first understand what a SPA (single-page application) is and why React JS suits SPAs so well:

What is SPA?

A single-page application (SPA) is a web app implementation that loads only a single HTML document. When different content needs to be shown, the page updates the body of that document through JavaScript APIs such as XMLHttpRequest and Fetch, which makes the app more responsive.

Single-page applications are now a major part of web development thanks to their responsiveness and user-friendly flow. Making these single-page websites SEO-friendly, however, is quite a challenging task. Front-end JavaScript frameworks and libraries such as Angular, React, and Vue make that task considerably easier.

Of these popular options, this article focuses on React. Let's start with some top reasons to choose React JS.

Why Should You Use React?

Here are the top reasons to go for React JS when developing your website:

Stability in code

With React JS, code stability is much less of a worry. When you need to change something, the change is made in that specific component, and the parent structure is not affected.

This is one of the key reasons React JS is chosen when stable code matters.

React offers a workable development toolset

Working with React JS simplifies coding because you have a developer toolkit at hand. This toolkit makes development easier and saves developers a lot of time.

The toolkit is available as a browser extension for both Chrome and Firefox.

React JS is declarative

React JS takes a declarative approach to the DOM. You build interactive UIs by describing them in terms of component state, and when that state changes, React JS updates the DOM automatically. That means you rarely need to interact with the DOM directly.

Thus, creating interactive UIs and debugging them is quite simple. All you need to do is change the program state and check that the UI looks right. You can make changes without worrying about the DOM.

React JS allows you to speed up the development

React JS enables developers to reuse parts of their application on both the server side and the client side, so they spend less time coding.

Different developers can work on individual parts of the app, and their changes won't disturb the application's logic.

Now that we have covered why React JS is worth choosing, let's start the journey of creating an SEO-friendly web app.

What are the 3 Common Indexing Issues that are Generally Faced with JavaScript Pages?

Here are the most common challenges that occur when indexing JavaScript pages:

- The complex process of indexing

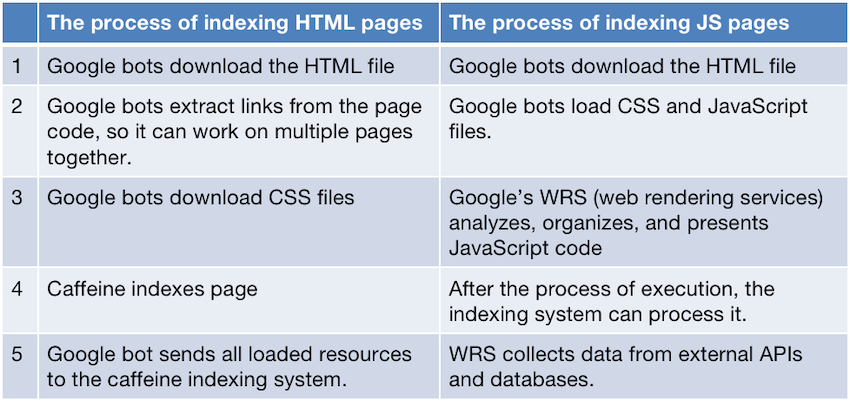

Google bots scan plain HTML pages much faster than JavaScript pages. Compare how indexing works for HTML pages and for JavaScript pages:

As the comparison shows, indexing JavaScript pages is a more complex process. A JavaScript page can only be indexed once all 5 steps have completed properly, which generally takes longer than indexing an HTML page.

- Indexing SPAs is a challenging task

A single-page application presents one page at a time and loads the rest of the information only when it is required. You can therefore explore the entire website in a single tab, moving through its pages one after another.

This concept is exactly the opposite of conventional multi-page apps. SPAs are faster and more responsive, and users get a seamless experience with them.

The issue arises with SEO. Single-page applications generally take time to render their content. If a Google bot crawls a page before the content appears, it treats the page as empty. Content that is not visible to Google bots hurts the ranking of your web app or site.
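To make the "empty page" problem concrete, here is a rough sketch of what a client-rendered SPA sends before any JavaScript runs. The `shellHtml` markup and the `visibleText` helper are hypothetical, and the tag stripping is deliberately naive; real crawlers are far more sophisticated.

```javascript
// Hypothetical initial HTML of a client-rendered SPA: the content container
// stays empty until the JavaScript bundle runs in the browser.
const shellHtml = `<!doctype html>
<html>
  <head><title>Shop</title></head>
  <body>
    <div id="root"></div>
    <script src="/bundle.js"></script>
  </body>
</html>`;

// Naive helper: returns the text inside <body> with scripts and tags removed.
function visibleText(html) {
  const bodyMatch = html.match(/<body[^>]*>([\s\S]*?)<\/body>/i);
  const body = bodyMatch ? bodyMatch[1] : "";
  return body
    .replace(/<script[\s\S]*?<\/script>/gi, "")
    .replace(/<[^>]+>/g, "")
    .trim();
}

// The same page with its content already present in the HTML.
const filledHtml = shellHtml.replace(
  '<div id="root"></div>',
  '<div id="root"><h1>Summer sale</h1></div>'
);

console.log(JSON.stringify(visibleText(shellHtml))); // ""
console.log(visibleText(filledHtml)); // Summer sale
```

A bot that reads only the first version, without waiting for the script, has nothing to index.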

- Errors in the coding of JavaScript

As mentioned above, Google bots crawl JavaScript pages and HTML pages very differently. A single error in the JavaScript code can make crawling the page impossible.

That's because the JavaScript parser does not tolerate errors. If the parser meets a character at an unexpected point, it stops parsing the current script immediately and throws a SyntaxError.

Thus, a minor error or typo can render the whole script useless. If the script contains such an error, the content won't be visible to the bot, and the Google bot will index the page as one without content.
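The all-or-nothing behavior of the parser can be checked with a small sketch. The `checkScript` helper and the two sample scripts are made up for illustration; `new Function` is used here only because it parses its whole argument before anything runs.

```javascript
// Returns "ok" if the source parses, otherwise the name of the error thrown.
// new Function() only parses the source here; the function is never called.
function checkScript(source) {
  try {
    new Function(source);
    return "ok";
  } catch (error) {
    return error.name;
  }
}

const validScript = 'const title = "Products"; console.log(title);';
// The same script with a single missing closing parenthesis.
const brokenScript = 'const title = "Products"; console.log(title;';

console.log(checkScript(validScript)); // ok
console.log(checkScript(brokenScript)); // SyntaxError
```

Note that the first statement of the broken script is valid on its own, yet none of the script is accepted.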

Core Challenges to Making React JS Website SEO-friendly

React SEO is challenging, but once you get it right, it rewards you with strong Google rankings. Before getting into the best practices, here are the challenges that generally occur:

- Extra loading time

Loading and parsing JavaScript takes a little extra time. Because JavaScript needs to make network calls to fetch the content, users may have to wait before they see the information they requested.

The longer users have to wait for information on the page, the lower Google will rank the website.

- Sitemap

A sitemap is a file that lists information about every page of your website and the connections between those pages. It helps search engines like Google crawl your website more quickly and thoroughly.

React does not have a built-in system for creating sitemaps. If you use React Router to handle routing, you can find tools that generate sitemaps from your routes. Still, it's a time-consuming process.
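As a rough idea of what such a tool produces, the sketch below builds a sitemap string from a list of paths. The `routes` array, the `buildSitemap` and `escapeXml` helpers, and the `https://domain.com` base URL are all assumptions for illustration; a real project would more likely derive the paths from its route configuration or use an existing generator.

```javascript
// Hypothetical list of public routes in the app.
const routes = ["/", "/shop", "/about", "/contact"];

// Escapes the characters that are special in XML text.
function escapeXml(value) {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Builds a sitemap.xml string using the sitemaps.org 0.9 schema.
function buildSitemap(baseUrl, paths) {
  const entries = paths
    .map((path) => new URL(path, baseUrl).href)
    .map((href) => `  <url><loc>${escapeXml(href)}</loc></url>`)
    .join("\n");
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    entries,
    "</urlset>",
  ].join("\n");
}

const sitemap = buildSitemap("https://domain.com", routes);
console.log(sitemap);
```

The resulting string would typically be written to `sitemap.xml` at build time and served from the site root.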

- Lack of Dynamic Metadata

Single-page applications render their content dynamically, and they provide a seamless experience because users can see all the required information on a single page.

But when it comes to SEO, a SPA's metadata is not updated immediately when crawlers follow a link to it. As a result, Google bots may consider the page empty while crawling, and the page cannot be indexed.

Developers can fix this ranking issue by creating individual pages for Google bots. However, creating individual pages adds to business expenses, and it can also affect the ranking process a little.

How to Make Your React Website SEO-friendly?

With the following 2 options, you can make your React JS website rank higher on search engines and reach your target audience:

- Isomorphic React Apps

Isomorphic React applications can run on both the server side and the client side.

With isomorphic JavaScript, you can run the React JS application on the server and produce the rendered HTML that the browser would otherwise generate. This HTML is served to everyone who requests the app, including Google bots.

On the client side, the application picks up this HTML and continues to operate in the browser. Data is added with JavaScript when required, so the isomorphic app remains dynamic.

Isomorphic applications work whether or not the client is able to run scripts. When JavaScript is not active, the code is still rendered on the server, and the browser can fetch all the meta tags and text from the HTML and CSS files.

However, developing isomorphic applications from scratch is a challenging and complex task. Two frameworks make the process quicker and simpler: Gatsby and Next.js.

Gatsby is an open-source compiler that allows developers to create robust and scalable web applications. Its focus is static generation rather than server-side rendering: it generates a static website and creates HTML files that are stored in the cloud.

Next.js is a React framework that helps developers build React applications without friction. It also provides automatic code splitting and hot code reloading. Let's see how Next.js handles server-side rendering.

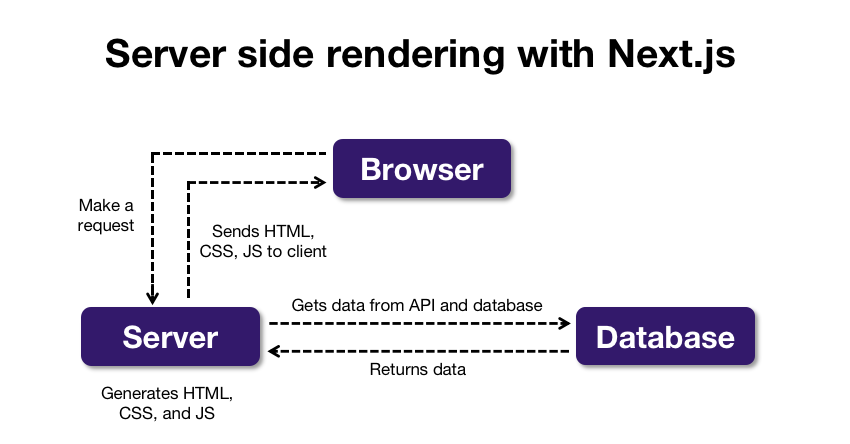

Server-side rendering with Next.js

If you have decided on a single-page application, server-side rendering is the best way to improve page ranking in search results, because Google bots easily index and rank pages that are rendered on the server. The ideal choice for implementing server-side rendering is Next.js, a React framework.

Next.js renders your JavaScript into HTML and CSS on the server in response to client requests, which lets Google bots fetch the content and display it in search results.
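The core idea of server-side rendering can be sketched without any framework: the server turns data into complete HTML before responding, so crawlers receive the content and meta tags right away. The following is a simplified illustration in plain JavaScript, not Next.js code; `renderProductPage`, `escapeHtml`, and the product fields are hypothetical.

```javascript
// Escapes text so it can be placed safely inside HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Hypothetical render function that a server would call for each request.
// The content is already in the HTML; the bundle only adds interactivity.
function renderProductPage(product) {
  const title = escapeHtml(product.name);
  const description = escapeHtml(product.description);
  return `<!doctype html>
<html>
  <head>
    <title>${title}</title>
    <meta name="description" content="${description}">
  </head>
  <body>
    <div id="root"><h1>${title}</h1><p>${description}</p></div>
    <script src="/bundle.js"></script>
  </body>
</html>`;
}

const html = renderProductPage({
  name: "Desk Lamp",
  description: "A small LED lamp for reading & work.",
});
console.log(html);
```

A framework such as Next.js takes care of the equivalent step for React components, along with routing and sending the client-side bundle.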

Pros of Server Side Rendering:

- Server-side rendering makes your website's pages available to users almost instantly.

- It optimizes web pages not only for search engines but also for social media.

- It's pretty useful for SEO.

- Server-side rendering brings plenty of benefits that enhance the app's UI.

Cons of Server Side Rendering:

- Slower page transitions

- Server-side rendering is generally much more costly than pre-rendering

- Higher latency

- Pretty complex caching

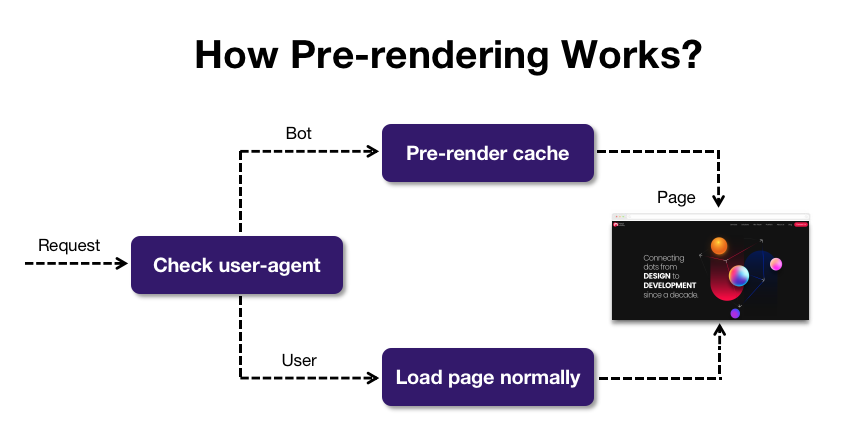

- Pre-rendering

Pre-rendering is another option for making your web apps visible with a higher ranking. A pre-renderer detects when search bots or crawlers request your web pages and serves them a cached static HTML version of your website. If the request comes from a user, the normal page is loaded.

Pre-rendering means generating the HTML pages ahead of time so they can be fetched from the server. All HTML pages are rendered and cached in advance with headless Chrome, which makes the process smooth and easy for developers.

Also, developers do not need to change the existing codebase, or if changes are needed, they are minor.

Pre-renderers can transform any JavaScript code into basic HTML, but they might not work properly if the data changes frequently.
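The bot-versus-user decision described above might look roughly like the sketch below. Everything here is an assumption for illustration: the `CRAWLER_PATTERN` list is far shorter than what real pre-rendering services maintain, and `prerenderCache`, `spaShell`, and `selectResponse` are hypothetical names.

```javascript
// Hypothetical, incomplete list of crawler user-agent fragments.
const CRAWLER_PATTERN = /googlebot|bingbot|twitterbot|facebookexternalhit/i;

function isCrawler(userAgent) {
  return CRAWLER_PATTERN.test(userAgent || "");
}

// Cache of pre-rendered pages keyed by path (hypothetical content).
const prerenderCache = new Map([
  ["/shop", "<html><body><h1>Shop</h1></body></html>"],
]);

// The normal SPA shell that regular visitors receive.
const spaShell =
  '<html><body><div id="root"></div><script src="/bundle.js"></script></body></html>';

// Crawlers get the cached static HTML when available; everyone else gets the SPA.
function selectResponse(path, userAgent) {
  if (isCrawler(userAgent) && prerenderCache.has(path)) {
    return prerenderCache.get(path);
  }
  return spaShell;
}

const botAgent = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)";
console.log(selectResponse("/shop", botAgent)); // the cached static page
console.log(selectResponse("/shop", "Mozilla/5.0 (Windows NT 10.0)") === spaShell); // true
```

Because the cached HTML is a snapshot, it has to be regenerated whenever the underlying page changes, which is why frequently changing data is a poor fit.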

Pros of Pre-rendering:

- Pre-rendering is easier to implement

- It runs every JavaScript file and transforms the result into static HTML

- The pre-rendering approach requires the fewest codebase changes

- It works well with the latest web features

Cons of Pre-rendering:

- Pre-rendering services are not free

- It's not ideal for pages whose data is updated frequently

- It takes a lot of time if the website is extensive and has many pages

- You need to rebuild a pre-rendered page every time you update its content

Either option can benefit you; you need to decide which one is best suited to your venture.

Best Practices to Make Your React Website SEO-friendly

Finally, here is the segment with the solutions for React SEO. Let's get started:

- Building static or dynamic web applications

As discussed earlier, single-page applications (SPAs) are often difficult for Google to fetch. Static or dynamic web apps come to the rescue because they use server-side rendering, which helps Google bots crawl your website smoothly.

You do not always need to choose a SPA. The right choice mainly depends on the marketplace you are in.

For example, if every page of your website offers something valuable to the user, a dynamic website is your choice. If you plan to promote your landing pages, a static website is the one to opt for.

- URL case

Google bots treat pages as separate when their URLs differ only in letter case (for example, /Invision and /invision).

These two URLs are considered different because of the difference in case. To avoid this common blunder, always generate your URLs in lowercase.
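One common way to enforce lowercase URLs is to redirect any mixed-case path to its lowercase form on the server. The sketch below only computes the redirect target; `lowercaseRedirectTarget` is a hypothetical helper, and issuing the actual redirect (typically a 301) is left to whatever server you use.

```javascript
// Returns the lowercase version of a URL's path, or null if the path is
// already lowercase. The query string is left untouched because it may be
// case-sensitive.
function lowercaseRedirectTarget(requestUrl) {
  const url = new URL(requestUrl);
  const lowerPath = url.pathname.toLowerCase();
  if (lowerPath === url.pathname) {
    return null;
  }
  url.pathname = lowerPath;
  return url.href;
}

console.log(lowercaseRedirectTarget("https://domain.com/Invision?ref=Home"));
// https://domain.com/invision?ref=Home
console.log(lowercaseRedirectTarget("https://domain.com/invision"));
// null
```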

- 404 code

Any page with an error in its data should return a 404 code, so set up this handling in server.js and route.js as early as you can. Keeping server.js and route.js up to date can noticeably improve traffic to your web app or website.
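The article does not show the contents of server.js or route.js, so the following is only a generic sketch of the idea: look up the requested path and answer with a 404 status when nothing matches. The `pages` table and the `resolvePage` function are hypothetical.

```javascript
// Hypothetical table of known routes and their page content.
const pages = new Map([
  ["/", "<h1>Home</h1>"],
  ["/shop", "<h1>Shop</h1>"],
]);

// Returns the status code and body a server could send for a given path.
function resolvePage(path) {
  if (pages.has(path)) {
    return { status: 200, body: pages.get(path) };
  }
  // Unknown paths get a real 404 status, not a 200 with an error message.
  return { status: 404, body: "<h1>Page not found</h1>" };
}

console.log(resolvePage("/shop").status); // 200
console.log(resolvePage("/does-not-exist").status); // 404
```

Returning the correct status code matters because a "not found" page served with status 200 can still be indexed as if it were real content.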

- Try not to use hashed URLs

This is not a major issue, but the Google bot does not see anything after the hash in a URL. For example:

https://domain.com/#/shop

https://domain.com/#/about

https://domain.com/#/contact

The Google bot generally ignores everything after the hash, so for crawling purposes all three of these are just https://domain.com/.
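The standard `URL` class shows why: the part after the hash is a fragment, not part of the path. The `toCleanUrl` helper below is a hypothetical illustration of mapping a hash-based route to a path-based one. In React Router terms, this corresponds to preferring path-based routing (such as `BrowserRouter`) over hash-based routing (`HashRouter`).

```javascript
const hashed = new URL("https://domain.com/#/shop");
console.log(hashed.pathname); // /
console.log(hashed.hash); // #/shop

// Hypothetical helper that converts a hash-based route into a clean path URL.
function toCleanUrl(hashedUrl) {
  const url = new URL(hashedUrl);
  if (url.hash.startsWith("#/")) {
    url.pathname = url.hash.slice(1); // "#/shop" -> "/shop"
    url.hash = "";
  }
  return url.href;
}

console.log(toCleanUrl("https://domain.com/#/shop")); // https://domain.com/shop
```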

Best React SEO Optimization Tools

Here are the best React SEO optimization tools, which make SEO and development a lot easier:

- React Helmet

React Helmet is a library that lets you work with Google bots and social media crawlers seamlessly. It adds meta tags to your React pages so that your web app gives crawlers more useful information.

    import React from 'react';
    import { Helmet } from 'react-helmet';
    import ProductList from '../components/ProductList';

    // Home page: Helmet places the tags below into the document <head>.
    // The title, path, description, and keyword values are placeholders.
    const Home = () => {
      return (
        <React.Fragment>
          <Helmet>
            <title>title</title>
            <link rel="icon" href={"path"} />
            <meta name="description" content={"description"} />
            <meta name="keywords" content={"keyword"} />
            <meta property="og:title" content={"og:title"} />
            <meta property="og:description" content={"og:description"} />
          </Helmet>
          <ProductList />
        </React.Fragment>
      );
    };

    export default Home;

- React Router

The difficulty in optimizing React web apps comes from the SPA model itself. Single-page applications are very comfortable for users, and you can still make good use of the SPA model by applying certain SEO rules and elements to your pages.

Hence, we should use React Router to create URLs that open as separate pages.

Blog Source- https://www.mindinventory.com/blog/react-seo-best-practices/